本站文章除注明转载外,均为本站原创: 转载自love wife & love life —Roger 的Oracle技术博客

2014年11月8号21点左右某客户的数据库集群出现swap耗尽的情况,导致数据库无法正常使用。此时Oracle告警日志的错误如下:

Sat Nov 08 20:48:36 CST 2014 Thread 1 advanced to log sequence 10722 (LGWR switch) Current log# 2 seq# 10722 mem# 0: /dev/rlvxxxredo121 Current log# 2 seq# 10722 mem# 1: /dev/rlvxxxredo122 Sat Nov 08 20:50:23 CST 2014 Process startup failed, error stack: Sat Nov 08 20:50:41 CST 2014 Errors in file /oracle/product/10.2.0/admin/xxx/bdump/xxx1_psp0_1835540.trc: ORA-27300: OS system dependent operation:fork failed with status: 12 ORA-27301: OS failure message: Not enough space ORA-27302: failure occurred at: skgpspawn3 Sat Nov 08 20:50:41 CST 2014 Process m000 died, see its trace file Sat Nov 08 20:50:41 CST 2014 ksvcreate: Process(m000) creation failed 。。。。。。。 Sat Nov 08 21:51:33 CST 2014 Thread 1 advanced to log sequence 10745 (LGWR switch) Current log# 1 seq# 10745 mem# 0: /dev/rlvxxxredo111 Current log# 1 seq# 10745 mem# 1: /dev/rlvxxxredo112 Sat Nov 08 21:59:20 CST 2014 Process startup failed, error stack: Sat Nov 08 21:59:21 CST 2014 Errors in file /oracle/product/10.2.0/admin/xxx/bdump/xxx1_psp0_1835540.trc: ORA-27300: OS system dependent operation:fork failed with status: 12 ORA-27301: OS failure message: Not enough space ORA-27302: failure occurred at: skgpspawn3 Sat Nov 08 21:59:21 CST 2014 Process PZ95 died, see its trace file 。。。。。。 Process PZ95 died, see its trace file Sat Nov 08 22:04:09 CST 2014 Process startup failed, error stack: Sat Nov 08 22:04:09 CST 2014 Errors in file /oracle/product/10.2.0/admin/xxx/bdump/xxx1_psp0_1835540.trc: ORA-27300: OS system dependent operation:fork failed with status: 12 ORA-27301: OS failure message: Not enough space ORA-27302: failure occurred at: skgpspawn3 Sat Nov 08 22:04:10 CST 2014 Process PZ95 died, see its trace file Sat Nov 08 22:06:11 CST 2014 Thread 1 advanced to log sequence 10747 (LGWR switch) Current log# 3 seq# 10747 mem# 0: /dev/rlvxxxredo131 Current log# 3 seq# 10747 mem# 1: /dev/rlvxxxredo132 Sat Nov 08 22:41:05 CST 2014

根据数据库alert log的报错信息,我们可以判断,在8号20:56左右开始出现ORA-27300以及ORA-27301错误,根据Oracle MOS 文档

Troubleshooting ORA-27300 ORA-27301 ORA-27302 errors [ID 579365.1]的描述,我们可以知道,这个错误产生的原因就是内存不足导致.

出现该错误的主机为Oracle RAC的xxx1节点。该主机物理内存大小为96G,Oracle SGA配置为30G,PGA配置为6GB,操作系统Swap配置为16GB。

正常情况下,物理主机的内存是可以满足正常使用的。由于在20:56开始出现无法fork 进程,即使无法分配内存资源,说明在该时间点之前

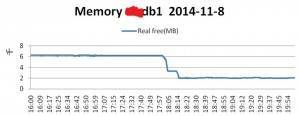

物理主机的内存使用已经出现问题了。通过Nmon 监控,我们可以看到如下的数据:

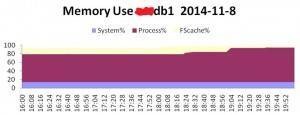

我们可以看到,xxxdb1主机的物理内存从18:01分开始突然下降的很厉害,到18:14左右时,物理内存free Memory已经不足2GB了。而该主机的物理内存中,大部分为Process%所消耗,如下:

我们可以看到,该节点从18:20左右Process% 所消耗的内存开始突然增加,到19:52分时,基本上消耗了所有的物理内存。

这里我们需要注意的,这里的Process% 内存消耗,是指该主机上的所有应用程序的进程消耗,包括oracle的所有进程,以及其他应用程序的进程。

我们根据Oracle 的AWR历史数据进行查询,发现并没有明显的会话消耗内存很高的情况,如下:

SQL> select INSTANCE_NUMBER,snap_id,sum(value/1024/1024) from dba_hist_sga where snap_id > 13553 and snap_id < 13558 2 group by INSTANCE_NUMBER,snap_id order by 1,2; INSTANCE_NUMBER SNAP_ID SUM(VALUE/1024/1024) --------------- ---------- -------------------- 1 13554 30720 1 13555 30720 1 13556 30720 2 13554 30720 2 13555 30720 2 13556 30720 2 13557 30720

从Oracle的历史视图我们也发现操作系统在这段时间段出现了大量的swap操作,如下:

SQL> select INSTANCE_NUMBER,SNAP_ID,STAT_NAME,value from dba_hist_osstat 2 where stat_name like 'VM%' and snap_id > 13550 and snap_id < 13559 3 order by 1,2; INSTANCE_NUMBER SNAP_ID STAT_NAME VALUE --------------- ---------- ---------------- ------------------ 1 13551 VM_IN_BYTES 4691725926408 1 13551 VM_OUT_BYTES 14798577905664 1 13552 VM_OUT_BYTES 14799491960832 1 13552 VM_IN_BYTES 4691727376392 1 13553 VM_OUT_BYTES 14800572719088 1 13553 VM_IN_BYTES 4691727429624 1 13554 VM_IN_BYTES 4691777949696 1 13554 VM_OUT_BYTES 14820690083832 1 13555 VM_OUT_BYTES 14857568350200 1 13555 VM_IN_BYTES 4693160173560 1 13556 VM_OUT_BYTES 14876324397048 1 13556 VM_IN_BYTES 4695865995264 1 13558 VM_OUT_BYTES 14882330329080 1 13558 VM_IN_BYTES 4829460062208 2 13551 VM_OUT_BYTES 2273165344776 2 13551 VM_IN_BYTES 347420766216 2 13552 VM_OUT_BYTES 2273229529104 2 13552 VM_IN_BYTES 347420766216 2 13553 VM_OUT_BYTES 2273286496272 2 13553 VM_IN_BYTES 347420766216 2 13554 VM_OUT_BYTES 2324453691408 2 13554 VM_IN_BYTES 347433598968 2 13555 VM_IN_BYTES 347559141384 2 13555 VM_OUT_BYTES 2383075213320 2 13556 VM_IN_BYTES 347674648584 2 13556 VM_OUT_BYTES 2430000705552 2 13557 VM_IN_BYTES 473531183112 2 13557 VM_OUT_BYTES 2499316277256 2 13558 VM_OUT_BYTES 2507250249744 2 13558 VM_IN_BYTES 473575673856 30 rows selected.

上述数据为累积数据,我们需要前后相减进行计算,如下:

+++ 16:00 --17:00点 SQL> select (4691727376392-4691725926408)/1024/1024 from dual; (4691727376392-4691725926408)/1024/1024 --------------------------------------- 1.3828125 SQL> select (14799491960832-14798577905664)/1024/1024 from dual; (14799491960832-14798577905664)/1024/1024 ----------------------------------------- 871.710938 ---换出的内存 +++ 17:00 --18:00点 SQL> select (4691727429624-4691727376392)/1024/1024 from dual; (4691727429624-4691727376392)/1024/1024 --------------------------------------- .050765991 SQL> select (14800572719088-14799491960832) /1024/1024 from dual; (14800572719088-14799491960832)/1024/1024 ----------------------------------------- 1030.69139 +++ 18:00 --19:00点 SQL> select (4691777949696-4691727429624)/1024/1024 from dual; (4691777949696-4691727429624)/1024/1024 --------------------------------------- 48.1796951 SQL> select (14820690083832-14800572719088)/1024/1024 from dual; (14820690083832-14800572719088)/1024/1024 ----------------------------------------- 19185.4141 +++ 19:00 --20:00点 SQL> select (4693160173560-4691777949696)/1024/1024 from dual; (4693160173560-4691777949696)/1024/1024 --------------------------------------- 1318.1914 SQL> select (14857568350200-14820690083832)/1024/1024 from dual; (14857568350200-14820690083832)/1024/1024 ----------------------------------------- 35169.8555 +++ 20:00 --21:00点 SQL> select (4695865995264-4693160173560)/1024/1024 from dual; (4695865995264-4693160173560)/1024/1024 --------------------------------------- 2580.47266 SQL> select (14876324397048-14857568350200)/1024/1024 from dual; (14876324397048-14857568350200)/1024/1024 ----------------------------------------- 17887.1602

从数据库层面,我们没有发现内存消耗较高的会话或应用SQL,如下:

SQL> select INSTANCE_NUMBER,snap_id,sum(SHARABLE_MEM)/1024/1024 from dba_hist_sqlstat 2 where snap_id > 13550 and snap_id < 13558 3 group by INSTANCE_NUMBER,snap_id 4 order by 1,2; INSTANCE_NUMBER SNAP_ID SUM(SHARABLE_MEM)/1024/1024 --------------- ---------- --------------------------- 1 13551 28.9083166 1 13552 30.0213976 1 13553 28.7059259 1 13554 29.1716347 1 13555 29.1961374 1 13556 35.6658726 2 13551 19.5267887 2 13552 20.9447975 2 13553 23.5789862 2 13554 21.0861912 2 13555 22.5129433 2 13556 23.0631037 2 13557 21.7776823 13 rows selected. SQL> select INSTANCE_NUMBER,snap_id,sql_id,SHARABLE_MEM/1024/1024 from dba_hist_sqlstat 2 where snap_id > 13550 and snap_id < 13558 and SHARABLE_MEM > 1000000 3 order by 1,2,4; INSTANCE_NUMBER SNAP_ID SQL_ID SHARABLE_MEM/1024/1024 --------------- ---------- ------------- ---------------------- 1 13551 bk0cum2bjs784 3.60285664 1 13551 a62fjfn56x5k7 5.38305569 1 13552 bk0cum2bjs784 3.61285877 1 13552 a62fjfn56x5k7 5.38305569 1 13553 bk0cum2bjs784 3.61285877 1 13553 a62fjfn56x5k7 5.52838802 1 13554 bk0cum2bjs784 3.61285877 1 13554 a62fjfn56x5k7 5.67374325 1 13555 bk0cum2bjs784 3.70570087 1 13555 a62fjfn56x5k7 5.52840328 1 13556 czj7c4r6q1vr2 1.528368 1 13556 bk0cum2bjs784 3.70570087 1 13556 a62fjfn56x5k7 6.25511074 2 13551 bk0cum2bjs784 1.26796436 2 13551 3j9yx7t5abcyg 1.65198135 ........ 2 13557 bk0cum2bjs784 1.37086964 2 13557 a62fjfn56x5k7 1.74954891 31 rows selected.

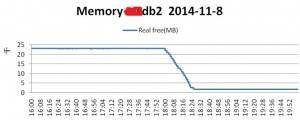

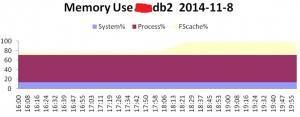

查询18点至21点的历史数据,我们都没有发现会话消耗内存很高的情况。据业务了解,xxxdb2节点每周6会进行一个批量的操作,可能会产生影响。基于这一点,我们分析了xxxdb2节点的nmon数据,如下:

从xxxdb2节点的数据来看,内存的变化也是在18:00左右,然而变化的却是FScache%, Process%的指标是没有变化的。根据这一点,我们可以判断,在该时间段内数据库可能有一些大的操作,产生了大量的归档日志。根据查下,发现确实这段时间产生的日志相对较多:

INSTANCE_NUMBER SNAP_ID FIRST_TIME SEQUENCE# --------------- ---------- ------------------- ---------- 2 13552 2014-11-08 01:26:46 5220 2 13552 2014-11-08 01:48:32 5221 2 13552 2014-11-08 02:33:27 10672 2 13552 2014-11-08 02:33:29 5222 2 13552 2014-11-08 02:44:33 10673 2 13552 2014-11-08 03:01:24 10674 2 13552 2014-11-08 11:03:03 10675 2 13552 2014-11-08 11:03:06 5223 2 13553 2014-11-08 01:26:46 5220 2 13553 2014-11-08 01:48:32 5221 2 13553 2014-11-08 02:33:27 10672 2 13553 2014-11-08 02:33:29 5222 2 13553 2014-11-08 02:44:33 10673 2 13553 2014-11-08 03:01:24 10674 2 13553 2014-11-08 11:03:03 10675 2 13553 2014-11-08 11:03:06 5223 2 13554 2014-11-08 18:46:54 10688 2 13554 2014-11-08 18:50:24 10689 2 13554 2014-11-08 18:54:04 10690 2 13554 2014-11-08 18:55:21 5268 2 13554 2014-11-08 18:56:32 5269 2 13554 2014-11-08 18:57:43 5270 2 13554 2014-11-08 18:57:46 10691 2 13554 2014-11-08 19:00:06 5271 2 13555 2014-11-08 19:48:46 10706 2 13555 2014-11-08 19:52:10 10707 2 13555 2014-11-08 19:55:37 10708 2 13555 2014-11-08 19:57:51 5323 2 13555 2014-11-08 19:58:55 5324 2 13555 2014-11-08 19:58:56 10709 2 13555 2014-11-08 19:59:59 5325 2 13555 2014-11-08 20:01:05 5326

由于在该时间段产生了大量的操作,因此就可能就会产生大量的gc 等待,从节点2的awr数据来看,确实产生了大量的gc等待事件,如下:

Event Waits Time(s) Avg Wait(ms) % Total Call Time Wait Class gc buffer busy 11,042,588 20,687 2 28.1 Cluster gc current block 2-way 1,113,439 16,922 15 23.0 Cluster gc current block congested 19,115 10,336 541 14.1 Cluster CPU time 9,914 13.5 gc cr multi block request 6,430,854 3,965 1 5.4 Cluster

那么这里有没有可能是由于大量gc 的产生,导致Oracle RAC的核心进程lms等进程的负载增加,导致内存消耗的剧增呢?

实际上,这一点是可以排除的,如果是Oracle的进程内存消耗较高,那么节点xxxdb2节点的内存消耗变动,在18:00左右开始上升的应该是Process%,而不是FScache%.

到这里我们可以总结如下:

故障时间段:

1) 节点1 内存耗光,主要是Process %消耗

2) 节点2的内存的变化主要是发生在FScache%.

基于这两点,我们可以排除是数据库本身的进程消耗的大量内存导致swap被耗尽。

另外,由于节点1部署了Oracle Goldengate同步软件,所以我们怀疑急有可能是该软件导致,基于这个猜想,我们进行了简单分析。

[oracle@xxxdb1]$ps gv|sort -rn +6|head -20 2556640 - A 39:42 996 113352 120200 xx 10626 6848 0.0 0.0 /ggs/e 6750342 - A 28:39 0 68968 117608 xx 90796 48640 0.0 0.0 oracle 7078566 - A 55:18 0 63304 111944 xx 90796 48640 0.1 0.0 oracle 6881426 - A 6:43 0 61312 109952 xx 90796 48640 0.0 0.0 oracle 6815884 - A 28:24 0 61092 109732 xx 90796 48640 0.0 0.0 oracle 5964700 - A 64:18 0 57524 106164 xx 90796 48640 0.1 0.0 oracle 7078060 - A 8:41 0 49140 97780 xx 90796 48640 0.0 0.0 oracle 5375364 - A 0:26 0 40496 89136 xx 90796 48640 0.0 0.0 oracle 1638454 - A 1:26 2531 77564 77656 xx 79 92 0.0 0.0 /var/o 10617338 - A 80:53 24 27272 75912 xx 90796 48640 0.1 0.0 oracle 2688502 - A 10:01 107 67088 73936 xx 10626 6848 0.0 0.0 /ggs/e 2425150 - A 5:26 58 66604 73452 xx 10626 6848 0.0 0.0 /ggs/e 3080268 - A 10:53 370 66052 72900 xx 10626 6848 0.0 0.0 /ggs/e ........ [oracle@xxxdb1]$ps -ef|grep 2556640 oracle 3211314 2556640 0 Nov 10 - 1:34 oraclexxx1 (DESCRIPTION=(LOCAL=YES)(ADDRESS=(PROTOCOL=beq))) oracle 2556640 983528 0 Nov 10 - 39:45 /ggs/extract PARAMFILE /ggs/dirprm/extxxxn.prm REPORTFILE /ggs/dirrpt/EXTxxxN.rpt PROCESSID EXTxxxN USESUBDIRS oracle 2949672 2556640 0 Nov 10 - 2:42 oraclexxx1 (DESCRIPTION=(LOCAL=YES)(ADDRESS=(PROTOCOL=beq))) oracle 3212464 2556640 0 Nov 10 - 1:49 oraclexxx1 (DESCRIPTION=(LOCAL=YES)(ADDRESS=(PROTOCOL=beq)))

我们可以看到,当前时间点ogg的其中一个抽取进程的内存消耗都超过110M。 进一步我们检查该进程的report日志,发现在故障时间段内该进程的操作是十分频繁的:

2014-11-08 14:15:52 INFO OGG-01738 BOUNDED RECOVERY: CHECKPOINT: for object pool 2: p10617402_Redo Thread 2: start=SeqNo: 5223, RBA: 323015184, SCN: 10.2497831943 (45447504903), Timestamp: 2014-11-08 14:15:49.000000, end=SeqNo: 5223, RBA: 323059712, SCN: 10.2 497831972 (45447504932), Timestamp: 2014-11-08 14:15:50.000000, Thread: 2. Wildcard TABLE resolved (entry ds_h.*): table "DS_H"."BD_DIVIDEND_CONFIRM$"; Using the following key columns for source table DS_H.BD_DIVIDEND_CONFIRM$: DIVIDEND_CONFIRM_ID. 2014-11-08 18:01:38 INFO OGG-01026 Rolling over remote file ./dirdat/nt006444. 2014-11-08 18:03:43 INFO OGG-01026 Rolling over remote file ./dirdat/nt006445. 2014-11-08 18:04:05 INFO OGG-01026 Rolling over remote file ./dirdat/nt006446. 2014-11-08 18:04:28 INFO OGG-01026 Rolling over remote file ./dirdat/nt006447. 2014-11-08 18:04:50 INFO OGG-01026 Rolling over remote file ./dirdat/nt006448. 2014-11-08 18:13:18 INFO OGG-01026 Rolling over remote file ./dirdat/nt006449. 2014-11-08 18:14:26 INFO OGG-01026 Rolling over remote file ./dirdat/nt006450. 2014-11-08 18:14:58 INFO OGG-01026 Rolling over remote file ./dirdat/nt006451. 2014-11-08 18:15:23 INFO OGG-01026 Rolling over remote file ./dirdat/nt006452. 2014-11-08 18:16:01 INFO OGG-01738 BOUNDED RECOVERY: CHECKPOINT: for object pool 1: p10617402_Redo Thread 1: start=SeqNo: 10679 , RBA: 511504, SCN: 10.2501163534 (45450836494), Timestamp: 2014-11-08 18:15:58.000000, end=SeqNo: 10679, RBA: 517632, SCN: 10.25011 65080 (45450838040), Timestamp: 2014-11-08 18:15:59.000000, Thread: 1. 2014-11-08 18:16:01 INFO OGG-01738 BOUNDED RECOVERY: CHECKPOINT: for object pool 2: p10617402_Redo Thread 2: start=SeqNo: 5233, RBA: 489575952, SCN: 10.2500983614 (45450656574), Timestamp: 2014-11-08 18:13:28.000000, end=SeqNo: 5235, RBA: 170145608, SCN: 10.2 501166449 (45450839409), Timestamp: 2014-11-08 18:16:00.000000, Thread: 2. 2014-11-08 18:17:19 INFO OGG-01026 Rolling over remote file ./dirdat/nt006453. 2014-11-08 18:17:43 INFO OGG-01026 Rolling over remote file ./dirdat/nt006454. 2014-11-08 18:18:05 INFO OGG-01026 Rolling over remote file ./dirdat/nt006455. 2014-11-08 18:18:25 INFO OGG-01026 Rolling over remote file ./dirdat/nt006456. 2014-11-08 18:19:15 INFO OGG-01026 Rolling over remote file ./dirdat/nt006457. 2014-11-08 18:19:35 INFO OGG-01026 Rolling over remote file ./dirdat/nt006458. 2014-11-08 18:19:54 INFO OGG-01026 Rolling over remote file ./dirdat/nt006459. 2014-11-08 18:25:59 INFO OGG-01026 Rolling over remote file ./dirdat/nt006460. ........ ........ 2014-11-08 20:38:18 INFO OGG-01026 Rolling over remote file ./dirdat/nt006551. 2014-11-08 20:39:34 INFO OGG-01026 Rolling over remote file ./dirdat/nt006552. 2014-11-08 20:52:02 INFO OGG-01026 Rolling over remote file ./dirdat/nt006553.

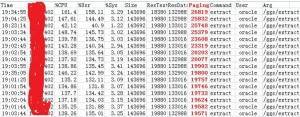

我们可以发现,在故障时间段内该进程的操作明显要比其他时间段要频繁的多,由于ogg本身也是部署在oracle用户下,因此这也不难解释为什么xxxdb1节点从该时间点开始内存的消耗会突然增加,而且nmon监控显示是Process%消耗增加。通过Nmon的监控数据,最后我们发现paging的操作主要是goldengate的extract进程导致,如下:

Goldengate进程之所以会消耗大量的内存,是因为extract进程首先需要将数据读取到本地节点的Memory中(即Goldengate的cachesize),然后将

cache中的数据解析为Goldengate特有的日志格式,并写入到trail文件中。如果日志量较大,那么Goldengate的抽取就就会出现延迟,即解析的

速度跟不上读取的速度,这会导致内存的消耗不断增大。上面数据是截取的部分内容,从时间点来看,和之前的nmon监控Memory的变化是完全符合的。

因此,我们认为11月8号的故障原因本质是由于数据库归档产生较大,导致Goldengate抽取进程消耗大量内存,最后产生大量的swap。

Related posts: